Kubernetes moves away from Docker: Dockershim was removed in v1.24. https://kubernetes.io/zh-cn/blog/2022/02/17/dockershim-faq/

kubernetes

Preparation

Building v1.26.1 requires Go >= 1.19; inspect the local toolchain with go env.

wget https://github.com/golang/go/archive/refs/tags/go1.19.tar.gz
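The Go version requirement can be checked before starting a build. The following is a minimal sketch, not part of the original notes: `version_ge` is a hypothetical helper name, and the check assumes `go env GOVERSION` is available (Go 1.16 or newer).

```shell
#!/bin/sh
# version_ge A B: succeed when dotted version A is >= dotted version B.
# Only numeric components are compared; missing components count as 0.
# The body runs in a subshell, so "exit" only leaves the function.
version_ge() (
    a=$1
    b=$2
    while [ -n "$a" ] || [ -n "$b" ]; do
        x=${a%%.*}
        y=${b%%.*}
        x=${x:-0}
        y=${y:-0}
        if [ "$x" -gt "$y" ]; then exit 0; fi
        if [ "$x" -lt "$y" ]; then exit 1; fi
        # Drop the component just compared
        case $a in *.*) a=${a#*.} ;; *) a= ;; esac
        case $b in *.*) b=${b#*.} ;; *) b= ;; esac
    done
    exit 0
)

# Compare the installed toolchain against the 1.19 minimum, if go is present.
if command -v go >/dev/null 2>&1; then
    # "go1.19.4" -> "1.19.4"; any non-numeric suffix is dropped
    have=$(go env GOVERSION | sed 's/^go//; s/[^0-9.].*$//')
    if [ -n "$have" ] && version_ge "$have" 1.19; then
        echo "go $have is new enough"
    else
        echo "go ${have:-unknown} is too old, need >= 1.19" >&2
    fi
fi
```

The comparison is done component by component rather than as a string, so 1.9 is correctly treated as older than 1.19.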

# Build specific components

$ make WHAT=cmd/kubelet

+++ [0325 21:01:36] Building go targets for linux/amd64

k8s.io/kubernetes/cmd/kubelet (non-static)

$ make kubectl kubeadm kubelet

+++ [0325 21:19:43] Building go targets for linux/amd64

k8s.io/kubernetes/cmd/kubectl (static)

+++ [0325 21:21:23] Building go targets for linux/amd64

k8s.io/kubernetes/cmd/kubeadm (static)

+++ [0325 21:19:45] Building go targets for linux/amd64

k8s.io/kubernetes/cmd/kubelet (non-static)

# Binaries built on different machines can link against different glibc versions (for example GLIBC_2.34) and may be incompatible across hosts

KUBE_BUILD_PLATFORMS=linux/amd64 make WHAT=cmd/kubelet GOFLAGS=-v GOGCFLAGS="-N -l"

network

cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf
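The heredoc above is cut off in these notes. As a rough sketch of what this step usually contains, the snippet below writes the module list and sysctl settings given in the upstream container-runtime guide; it is not necessarily what the author used. `write_k8s_net_conf` and its root-directory argument are hypothetical, added so the files can be generated in a scratch directory first.

```shell
#!/bin/sh
# Sketch: write the kernel-module and sysctl settings that the upstream
# container-runtime guide lists as prerequisites.
# $1 is a hypothetical root prefix (not from the original notes): empty
# targets the real /etc, a scratch directory gives a dry run.
write_k8s_net_conf() {
    root=${1:-}
    mkdir -p "$root/etc/modules-load.d" "$root/etc/sysctl.d"

    # Kernel modules to load at boot
    cat > "$root/etc/modules-load.d/k8s.conf" <<EOF
overlay
br_netfilter
EOF

    # Let iptables see bridged traffic and enable IPv4 forwarding
    cat > "$root/etc/sysctl.d/k8s.conf" <<EOF
net.bridge.bridge-nf-call-iptables  = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.ipv4.ip_forward                 = 1
EOF
}

# Dry run into a scratch directory; nothing under /etc is touched.
scratch=$(mktemp -d)
write_k8s_net_conf "$scratch"
cat "$scratch/etc/modules-load.d/k8s.conf" "$scratch/etc/sysctl.d/k8s.conf"
```

On a real host the function would be run as root with an empty prefix, followed by `modprobe overlay`, `modprobe br_netfilter` and `sysctl --system` to apply the settings without a reboot.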

swap/firewalld

swapoff -a

str=$(./bin/kubeadm --image-repository registry.aliyuncs.com/google_containers config images list)

for i in $(docker images | grep 'v1.26.1' | awk 'BEGIN{OFS=":"}{print $1,$2}'); do echo docker push $i ;done
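The loop above only prints the push commands. A similar dry run can also show the retagging step that moves images from the mirror into a private repository. This is a sketch under assumptions: `retarget_image` and `plan_mirror` are hypothetical helpers, and the destination `k8s.org/k8s` is taken from the `imageRepository` value used later in these notes.

```shell
#!/bin/sh
# retarget_image SRC DEST_REPO: keep only "name:tag" from SRC and prefix it
# with DEST_REPO.
retarget_image() {
    printf '%s/%s\n' "$2" "${1##*/}"
}

# plan_mirror DEST_REPO: read image references on stdin and print (not run)
# the docker tag and docker push commands needed to mirror them.
plan_mirror() {
    dest=$1
    while IFS= read -r img; do
        [ -n "$img" ] || continue
        new=$(retarget_image "$img" "$dest")
        echo "docker tag $img $new"
        echo "docker push $new"
    done
}

# Example with one image reference; on a real host the input would come from
#   ./bin/kubeadm --image-repository registry.aliyuncs.com/google_containers config images list
printf '%s\n' registry.aliyuncs.com/google_containers/kube-apiserver:v1.26.1 \
    | plan_mirror k8s.org/k8s
```

Printing the commands first, as the original loop does, makes it easy to review the target names before anything is pushed; piping the output to `sh` would execute them.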

CRI

https://kubernetes.io/zh-cn/docs/setup/production-environment/container-runtimes/

containerd

kubelet

kubelet.env

cat > /opt/kubernetes/kubelet.env <<EOF

systemctl status docker

……

CGroup: /system.slice/docker.service

├─1262 /usr/bin/dockerd

└─1476 containerd --config /var/run/docker/containerd/containerd.toml --log-level debug

# containerd.toml

[grpc]

address = "/var/run/docker/containerd/containerd.sock"

kubelet.service

cat >/usr/lib/systemd/system/kubelet.service <<EOF

systemctl daemon-reload && systemctl enable kubelet

Using custom images

kubeadm config print init-defaults>kubeadm.yaml

$ kubeadm config images list

registry.k8s.io/kube-apiserver:v1.28.3

registry.k8s.io/kube-controller-manager:v1.28.3

registry.k8s.io/kube-scheduler:v1.28.3

registry.k8s.io/kube-proxy:v1.28.3

registry.k8s.io/pause:3.9

registry.k8s.io/etcd:3.5.9-0

registry.k8s.io/coredns/coredns:v1.10.1

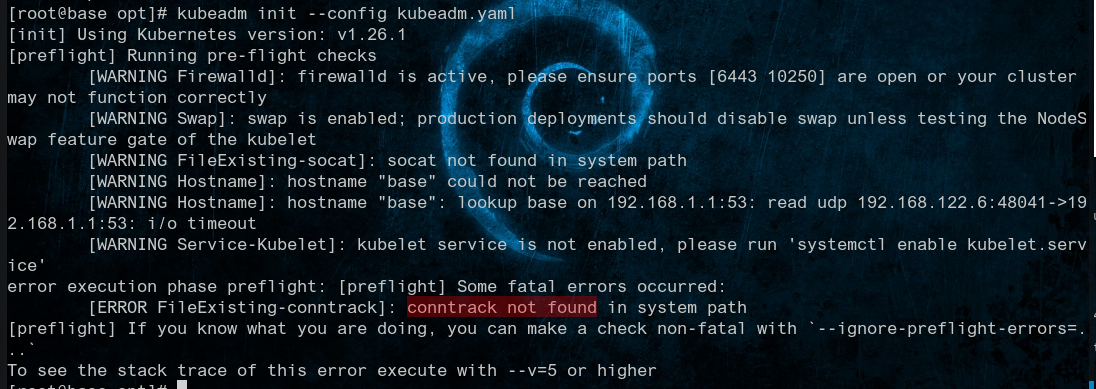

Initialization

Dependencies (CentOS)

yum install --downloadonly --downloaddir=/tmp/pages conntrack-tools

socat

# rpm -ivh /tmp/pages/socat-1.7.3.2-2.el7.x86_64.rpm

socat establishes a channel between two data streams.

http://mirror.centos.org/centos/7/os/x86_64/Packages/socat-1.7.3.2-2.el7.x86_64.rpm

conntrack

# rpm -ivh /tmp/pages/libnetfilter_*.rpm

conntrack tracks and records connection state.

conntrack-tools http://mirror.centos.org/centos/7/os/x86_64/Packages/conntrack-tools-1.4.4-7.el7.x86_64.rpm

Dependencies

http://mirror.centos.org/centos/7/os/x86_64/Packages/libnetfilter_cttimeout-1.0.0-7.el7.x86_64.rpm

http://mirror.centos.org/centos/7/os/x86_64/Packages/libnetfilter_cthelper-1.0.0-11.el7.x86_64.rpm

http://mirror.centos.org/centos/7/os/x86_64/Packages/libnetfilter_queue-1.0.2-2.el7_2.x86_64.rpm

Dependencies (Debian)

sudo apt install conntrack socat ethtool

init

Custom initialization

https://kubernetes.io/zh-cn/docs/reference/setup-tools/kubeadm/kubeadm-init/#config-file

https://kubernetes.io/zh-cn/docs/reference/config-api/kubeadm-config.v1beta3/

# Primary control-plane node

init-kubeadm.yaml

/opt/init-kubeadm.yaml

apiVersion: kubeadm.k8s.io/v1beta3
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: ujogs9.ntea26wujtca8fjb
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.122.11
  bindPort: 6443
certificateKey: 228bfe0e01e9456c981455b81abad41a067b9ca31bf9f91a692a778115cb9b7a
nodeRegistration:
  criSocket: unix:///var/run/containerd/containerd.sock
  imagePullPolicy: IfNotPresent
  name: k8s01
  taints: null
---
apiServer:
  extraArgs:
    etcd-servers: https://192.168.122.11:2379,https://192.168.122.12:2379,https://192.168.122.13:2379
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta3
certificatesDir: /etc/kubernetes/pki
clusterName: cs
controllerManager:
  extraArgs:
    "allocate-node-cidrs": "true"
    "cluster-cidr": "121.21.0.0/16"
    "node-cidr-mask-size": "20"
dns: {}
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: k8s.org/k8s
kind: ClusterConfiguration
kubernetesVersion: 1.26.1
controlPlaneEndpoint: k8s.org:6443
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/12
  podSubnet: 121.21.0.0/16
scheduler:
  extraArgs:
    log-flush-frequency: 6s

---

apiVersion: kubelet.config.k8s.io/v1beta1
authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 0s
    enabled: true
  x509:
    clientCAFile: /etc/kubernetes/pki/ca.crt
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 0s
    cacheUnauthorizedTTL: 0s
cgroupDriver: systemd
clusterDNS:
- 121.21.0.0  # kubeadm warns about this value in the log below; it recommends 10.96.0.10
clusterDomain: cluster.local
cpuManagerReconcilePeriod: 0s
evictionPressureTransitionPeriod: 0s
fileCheckFrequency: 0s
healthzBindAddress: 127.0.0.1
healthzPort: 10248
httpCheckFrequency: 0s
imageMinimumGCAge: 0s
kind: KubeletConfiguration
logging:
  flushFrequency: 0
  options:
    json:
      infoBufferSize: "0"
  verbosity: 0
memorySwap: {}
nodeStatusReportFrequency: 0s
nodeStatusUpdateFrequency: 0s
rotateCertificates: true
runtimeRequestTimeout: 0s
shutdownGracePeriod: 0s
shutdownGracePeriodCriticalPods: 0s
staticPodPath: /etc/kubernetes/manifests
streamingConnectionIdleTimeout: 0s
syncFrequency: 0s
volumeStatsAggPeriod: 0s

---

apiVersion: kubeproxy.config.k8s.io/v1alpha1
bindAddress: 0.0.0.0
bindAddressHardFail: false
clientConnection:
  acceptContentTypes: ""
  burst: 0
  contentType: ""
  kubeconfig: /var/lib/kube-proxy/kubeconfig.conf
  qps: 0
clusterCIDR: "121.21.0.0"  # kubeadm warns about this value in the log below; it recommends 121.21.0.0/16
AllocateNodeCIDRs: true  # not a KubeProxyConfiguration field; rejected by strict decoding in the log below
configSyncPeriod: 0s
conntrack:
  maxPerCore: null
  min: null
  tcpCloseWaitTimeout: null
  tcpEstablishedTimeout: null
detectLocal:
  bridgeInterface: ""
  interfaceNamePrefix: ""
detectLocalMode: ""
enableProfiling: false
healthzBindAddress: ""
hostnameOverride: ""
iptables:
  localhostNodePorts: null
  masqueradeAll: false
  masqueradeBit: null
  minSyncPeriod: 0s
  syncPeriod: 0s
ipvs:
  excludeCIDRs: null
  minSyncPeriod: 0s
  scheduler: "rr"
  strictARP: false
  syncPeriod: 30s
  tcpFinTimeout: 0s
  tcpTimeout: 0s
  udpTimeout: 0s
kind: KubeProxyConfiguration
metricsBindAddress: ""
mode: "ipvs"
nodePortAddresses: null
oomScoreAdj: null
portRange: ""
showHiddenMetricsForVersion: ""
winkernel:
  enableDSR: false
  forwardHealthCheckVip: false
  networkName: ""
  rootHnsEndpointName: ""
  sourceVip: ""

Initialization details

Initialization process

[root@base ~]# kubeadm init --config /opt/kubeadm.yaml

[init] Using Kubernetes version: v1.26.1

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [base kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.122.6]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [base localhost] and IPs [192.168.122.6 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [base localhost] and IPs [192.168.122.6 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 26.502067 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node base as control-plane by adding the labels: [node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node base as control-plane by adding the taints [node-role.kubernetes.io/control-plane:NoSchedule]

[bootstrap-token] Using token: abcdef.0123456789abcdef

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.122.6:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:a5b9ef128064a6c279cd2dc0d738ae2b4d4d8d993ccead6f7e2e9b8ec0d2d9d7

Running kubectl

https://kubernetes.io/zh-cn/docs/tasks/tools/install-kubectl-linux/

https://kubernetes.io/zh-cn/docs/setup/production-environment/tools/kubeadm/create-cluster-kubeadm/

If kubectl reports "The connection to the server localhost:8080 was refused - did you specify the right host or port?", no kubeconfig has been configured yet. Set one up as follows.

Non-root user

mkdir -p $HOME/.kube

Root user

# echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> /etc/profile

Keys

https://kubernetes.io/zh-cn/docs/reference/setup-tools/kubeadm/kubeadm-token/#cmd-token-create

kubeadm join k8s.org:6443 --token $1 \

--discovery-token-ca-cert-hash sha256:$2 \

--control-plane --certificate-key $3

token

kubeadm token generate

kubeadm token list # list existing tokens
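A bootstrap token has the form `<id>.<secret>`: six lowercase alphanumeric characters, a dot, then sixteen more. The id part is what appears in user names such as `system:bootstrap:ujogs9` in the error messages further down. The sketch below validates a token and extracts its id; `is_bootstrap_token` and `token_id` are hypothetical helper names, not kubeadm commands.

```shell
#!/bin/sh
# is_bootstrap_token TOKEN: succeed when TOKEN matches the bootstrap-token
# format [a-z0-9]{6}.[a-z0-9]{16}.
is_bootstrap_token() {
    printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

# token_id TOKEN: print the public id part (everything before the first dot).
token_id() {
    printf '%s\n' "${1%%.*}"
}

# Token taken from the init configuration in these notes
token=ujogs9.ntea26wujtca8fjb
if is_bootstrap_token "$token"; then
    echo "token id: $(token_id "$token")"
else
    echo "malformed token: $token" >&2
fi
```

Checking the format before writing a token into `bootstrapTokens` or a join command catches copy-and-paste damage such as a truncated secret.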

discovery-token-ca-cert-hash

openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa \
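The command above is cut off in these notes. For reference, the pipeline given in the upstream kubeadm documentation hashes the DER-encoded public key of the CA certificate with SHA-256. The sketch below wraps that pipeline in a function; `ca_cert_hash` is a hypothetical name, and the pipeline assumes an RSA CA key, which is the kubeadm default.

```shell
#!/bin/sh
# ca_cert_hash CERT: print the hex SHA-256 digest of the certificate's public
# key, as expected after "sha256:" in --discovery-token-ca-cert-hash.
# Assumes an RSA key (the "openssl rsa" step would fail for other key types).
ca_cert_hash() {
    openssl x509 -pubkey -in "$1" \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex \
        | sed 's/^.* //'
}

# Print the value for the cluster CA when run on a control-plane node.
if [ -r /etc/kubernetes/pki/ca.crt ]; then
    echo "sha256:$(ca_cert_hash /etc/kubernetes/pki/ca.crt)"
fi
```

The hash depends only on the CA public key, so it stays the same when tokens or certificate keys are regenerated.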

certificate-key

# --upload-certs certificateKey

W0314 19:18:31.841138 5585 version.go:104] could not fetch a Kubernetes version from the internet: unable to get URL "https://dl.k8s.io/release/stable-1.txt": Get "https://dl.k8s.io/release/stable-1.txt": context deadline exceeded (Client.Timeout exceeded while awaiting headers)

W0314 19:18:31.841320 5585 version.go:105] falling back to the local client version: v1.26.1

[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[upload-certs] Using certificate key:

58c8e04a2e479d3f10274eff43988d626f627e49ecc1ebb3463f8aedf50ccfda

sed -n "/certificateKey/s/:.*/: 58c8e04a2e479d3f10274eff43988d626f627e49ecc1ebb3463f8aedf50ccfda/p" /opt/join.yaml

kubeadm init phase upload-certs --upload-certs --config=SOME_YAML_FILE

join

command

master

kubeadm join k8s.org:6443 --token $1 \

kubeadm join k8s.org:6443 --token l860je.2f4ox4tb166kui7l --discovery-token-ca-cert-hash sha256:4533b6361be151af712c014c0b1c2eb52f902f52ff292f63ccc258c58d9e59be --control-plane --certificate-key 56a92754164260b76bbe525f0e60059b6eea38d2ef07f42231a2c3bebfd18bbf

node

# Regenerate

kubeadm join k8s.org:6443 --token q3paz2.w5zohvudzrsltrh3 --discovery-token-ca-cert-hash sha256:8f1ac78f64629c049a2e0e8a8e8fa83f29e92e6801ca1a1d27cc3e06ecef9943

controlPlane

# First control-plane node

kubeadm config print join-defaults --component-configs KubeProxyConfiguration

Initialization file

init-kubeadm.yaml

cat /opt/init-kubeadm.yaml

apiVersion: kubeadm.k8s.io/v1beta3
caCertPath: /etc/kubernetes/pki/ca.crt
discovery:
  bootstrapToken:
    apiServerEndpoint: k8s.org:6443
    token: yl0hvd.mv683yn2rljdrigk
    unsafeSkipCAVerification: true
  timeout: 5m0s
  tlsBootstrapToken: yl0hvd.mv683yn2rljdrigk
kind: JoinConfiguration
nodeRegistration:
  criSocket: unix:///var/run/containerd/containerd.sock
  imagePullPolicy: IfNotPresent
  name: k8s01
  taints: null
controlPlane:
  localAPIEndpoint:
    advertiseAddress: 192.168.122.11
    bindPort: 6443
  certificateKey: 66b4704450dd461f02f56c81b9c323363c0e2a077a1a5619aaab7b58eb953be9
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta3
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns: {}
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: k8s.org/k8s
kind: ClusterConfiguration
kubernetesVersion: 1.26.1
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/12
controlPlaneEndpoint: k8s.org:6443 # cluster endpoint
scheduler: {}
---
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
cgroupDriver: systemd
failSwapOn: false
# clusterDNS:
# - 10.96.0.10
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
ipvs:
  minSyncPeriod: 0s
  scheduler: "rr"
  syncPeriod: 30s
mode: "ipvs"

Control-plane initialization details

kubeadm join --config /opt/join.yaml

W0319 19:08:00.540407 31325 initconfiguration.go:305] error unmarshaling configuration schema.GroupVersionKind{Group:"kubeproxy.config.k8s.io", Version:"v1alpha1", Kind:"KubeProxyConfiguration"}: strict decoding error: unknown field "AllocateNodeCIDRs"

W0319 19:08:00.541712 31325 configset.go:177] error unmarshaling configuration schema.GroupVersionKind{Group:"kubeproxy.config.k8s.io", Version:"v1alpha1", Kind:"KubeProxyConfiguration"}: strict decoding error: unknown field "AllocateNodeCIDRs"

W0319 19:08:00.543375 31325 utils.go:69] The recommended value for "clusterCIDR" in "KubeProxyConfiguration" is: 121.21.0.0/16; the provided value is: 121.21.0.0

W0319 19:08:00.543398 31325 utils.go:69] The recommended value for "clusterDNS" in "KubeletConfiguration" is: [10.96.0.10]; the provided value is: [121.21.0.0]

[init] Using Kubernetes version: v1.26.1

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [k8s.org k8s01 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.122.11]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [k8s01 localhost] and IPs [192.168.122.11 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [k8s01 localhost] and IPs [192.168.122.11 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 18.528146 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node k8s01 as control-plane by adding the labels: [node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node k8s01 as control-plane by adding the taints [node-role.kubernetes.io/control-plane:NoSchedule]

[bootstrap-token] Using token: ujogs9.ntea26wujtca8fjb

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes by copying certificate authorities

and service account keys on each node and then running the following as root:

kubeadm join k8s.org:6443 --token ujogs9.ntea26wujtca8fjb \

--discovery-token-ca-cert-hash sha256:a51e48270ff979d72adbedc004ba7aad363623120ed9e24e9a6409e2ba5fde37 \

--control-plane --certificate-key 228bfe0e01e9456c981455b81abad41a067b9ca31bf9f91a692a778115cb9b7a

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join k8s.org:6443 --token ujogs9.ntea26wujtca8fjb \

--discovery-token-ca-cert-hash sha256:a51e48270ff979d72adbedc004ba7aad363623120ed9e24e9a6409e2ba5fde37

Running the join command directly on another control-plane node fails:

error execution phase control-plane-prepare/download-certs: error downloading certs: error downloading the secret: secrets "kubeadm-certs" is forbidden: User "system:bootstrap:ujogs9" cannot get resource "secrets" in API group "" in the namespace "kube-system"

The init run above skipped the upload-certs phase, so the kubeadm-certs Secret was never uploaded. Run the following command to upload the certificates and generate a new certificate key, then use that key in place of the old value when joining from the other control-plane nodes.

kubeadm init phase upload-certs --upload-certs

W0319 19:11:21.959770 32656 version.go:104] could not fetch a Kubernetes version from the internet: unable to get URL "https://dl.k8s.io/release/stable-1.txt": Get "https://dl.k8s.io/release/stable-1.txt": context deadline exceeded (Client.Timeout exceeded while awaiting headers)

W0319 19:11:21.960020 32656 version.go:105] falling back to the local client version: v1.26.1

[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[upload-certs] Using certificate key:

0240fc6ba87ada32042b73469103b5577df42603289429ea38f0c5789fc6f9c7
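Swapping the new certificate key into the join command can be scripted. The following is a sketch only: `extract_cert_key` and `join_control_plane_cmd` are hypothetical helpers, and the parsing assumes the key is printed on a line of its own as 64 hex characters, which is how it appears in the output above.

```shell
#!/bin/sh
# extract_cert_key: read "kubeadm init phase upload-certs" output on stdin and
# print the last line that consists solely of 64 hex characters.
extract_cert_key() {
    sed -n 's/^[[:space:]]*\([0-9a-f]\{64\}\)[[:space:]]*$/\1/p' | tail -n 1
}

# join_control_plane_cmd ENDPOINT TOKEN CA_HASH CERT_KEY: print (not run) the
# join command for an additional control-plane node.
join_control_plane_cmd() {
    printf 'kubeadm join %s --token %s --discovery-token-ca-cert-hash sha256:%s --control-plane --certificate-key %s\n' \
        "$1" "$2" "$3" "$4"
}

# Demo on the output recorded above; on a real node the input would be
#   kubeadm init phase upload-certs --upload-certs
key=$(extract_cert_key <<'EOF'
[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace
[upload-certs] Using certificate key:
0240fc6ba87ada32042b73469103b5577df42603289429ea38f0c5789fc6f9c7
EOF
)

join_control_plane_cmd k8s.org:6443 ujogs9.ntea26wujtca8fjb \
    a51e48270ff979d72adbedc004ba7aad363623120ed9e24e9a6409e2ba5fde37 "$key"
```

According to the upstream documentation the uploaded Secret expires after two hours, so the key should be regenerated shortly before each batch of control-plane joins.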

Control-plane join details

kubeadm join k8s.org:6443 --token ujogs9.ntea26wujtca8fjb \

--discovery-token-ca-cert-hash sha256:a51e48270ff979d72adbedc004ba7aad363623120ed9e24e9a6409e2ba5fde37 \

--control-plane --certificate-key 228bfe0e01e9456c981455b81abad41a067b9ca31bf9f91a692a778115cb9b7a

[preflight] Running pre-flight checks

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

W0319 19:10:37.146702 22564 utils.go:69] The recommended value for "clusterCIDR" in "KubeProxyConfiguration" is: 121.21.0.0/16; the provided value is: 121.21.0.0

W0319 19:10:37.146747 22564 utils.go:69] The recommended value for "clusterDNS" in "KubeletConfiguration" is: [10.96.0.10]; the provided value is: [121.21.0.0]

[preflight] Running pre-flight checks before initializing the new control plane instance

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[download-certs] Downloading the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

error execution phase control-plane-prepare/download-certs: error downloading certs: error downloading the secret: secrets "kubeadm-certs" is forbidden: User "system:bootstrap:ujogs9" cannot get resource "secrets" in API group "" in the namespace "kube-system"

To see the stack trace of this error execute with --v=5 or higher

[root@k8s03 ~]# kubeadm join k8s.org:6443 --token ujogs9.ntea26wujtca8fjb --discovery-token-ca-cert-hash sha256:a51e48270ff979d72adbedc004ba7aad363623120ed9e24e9a6409e2ba5fde37 --control-plane --certificate-key 0240fc6ba87ada32042b73469103b5577df42603289429ea38f0c5789fc6f9c7

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

W0319 19:11:33.434784 22613 utils.go:69] The recommended value for "clusterCIDR" in "KubeProxyConfiguration" is: 121.21.0.0/16; the provided value is: 121.21.0.0

W0319 19:11:33.434827 22613 utils.go:69] The recommended value for "clusterDNS" in "KubeletConfiguration" is: [10.96.0.10]; the provided value is: [121.21.0.0]

[preflight] Running pre-flight checks before initializing the new control plane instance

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[download-certs] Downloading the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[download-certs] Saving the certificates to the folder: "/etc/kubernetes/pki"

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [k8s03 localhost] and IPs [192.168.122.13 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [k8s03 localhost] and IPs [192.168.122.13 127.0.0.1 ::1]

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [k8s.org k8s03 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.122.13]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Valid certificates and keys now exist in "/etc/kubernetes/pki"

[certs] Using the existing "sa" key

[kubeconfig] Generating kubeconfig files

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[check-etcd] Checking that the etcd cluster is healthy

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

[etcd] Announced new etcd member joining to the existing etcd cluster

[etcd] Creating static Pod manifest for "etcd"

[etcd] Waiting for the new etcd member to join the cluster. This can take up to 40s

The 'update-status' phase is deprecated and will be removed in a future release. Currently it performs no operation

[mark-control-plane] Marking the node k8s03 as control-plane by adding the labels: [node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node k8s03 as control-plane by adding the taints [node-role.kubernetes.io/control-plane:NoSchedule]

This node has joined the cluster and a new control plane instance was created:

* Certificate signing request was sent to apiserver and approval was received.

* The Kubelet was informed of the new secure connection details.

* Control plane label and taint were applied to the new node.

* The Kubernetes control plane instances scaled up.

* A new etcd member was added to the local/stacked etcd cluster.

To start administering your cluster from this node, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Run 'kubectl get nodes' to see this node join the cluster.

yaml

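As of v1.26 the kubeadm configuration API is still a beta version (v1beta3).

https://kubernetes.io/zh-cn/docs/reference/setup-tools/kubeadm/kubeadm-join/

controlPlane

# Subsequent control-plane nodes (controlPlane)

The join configuration must contain a controlPlane section.

To be completed.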

worker

config

join-worker.yaml

join-worker

apiVersion: kubeadm.k8s.io/v1beta3
caCertPath: /etc/kubernetes/pki/ca.crt
discovery:
  bootstrapToken:
    apiServerEndpoint: kube-apiserver:6443
    token: abcdef.0123456789abcdef
    unsafeSkipCAVerification: true
  timeout: 5m0s
  tlsBootstrapToken: abcdef.0123456789abcdef
kind: JoinConfiguration
nodeRegistration:
  criSocket: unix:///var/run/containerd/containerd.sock
  imagePullPolicy: IfNotPresent
  name: debian
  taints: null

kubeadm join --skip-phases=preflight --config=./join-kubeadm.yaml

kubeadm token list

DNS configuration involves the kubelet parameter clusterDNS (part of KubeletConfiguration).

kubeadm config print join-defaults --component-configs KubeProxyConfiguration

config.yaml

cat /var/lib/kubelet/config.yaml

apiVersion: kubelet.config.k8s.io/v1beta1
authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 0s
    enabled: true
  x509:
    clientCAFile: /etc/kubernetes/pki/ca.crt
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 0s
    cacheUnauthorizedTTL: 0s
cgroupDriver: systemd
clusterDNS:
- 10.96.1.10
clusterDomain: cluster.local
cpuManagerReconcilePeriod: 0s
evictionPressureTransitionPeriod: 0s
fileCheckFrequency: 0s
healthzBindAddress: 127.0.0.1
healthzPort: 10248
httpCheckFrequency: 0s
imageMinimumGCAge: 0s
kind: KubeletConfiguration
logging:
  flushFrequency: 0
  options:
    json:
      infoBufferSize: "0"
  verbosity: 0
memorySwap: {}
nodeStatusReportFrequency: 0s
nodeStatusUpdateFrequency: 0s
rotateCertificates: true
runtimeRequestTimeout: 0s
shutdownGracePeriod: 0s
shutdownGracePeriodCriticalPods: 0s
staticPodPath: /etc/kubernetes/manifests
streamingConnectionIdleTimeout: 0s
syncFrequency: 0s
volumeStatsAggPeriod: 0s

sed -i 's/121.21.0.0/10.96.1.10/' /var/lib/kubelet/config.yaml
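kubeadm derives its recommended clusterDNS address from the service subnet by taking the tenth address in the range, which is why the warnings earlier in these notes suggest 10.96.0.10 for 10.96.0.0/12. The sketch below reproduces that calculation for IPv4; `dns_ip_for` is a hypothetical helper, not a kubeadm command.

```shell
#!/bin/sh
# dns_ip_for CIDR: print the 10th address of an IPv4 CIDR, the address that
# kubeadm recommends for clusterDNS. Runs in a subshell so the IFS change
# does not leak into the caller.
dns_ip_for() (
    cidr=$1
    base=${cidr%/*}
    prefix=${cidr#*/}

    # Split the dotted quad into $1..$4
    IFS=.
    set -- $base
    IFS=' '

    n=$(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
    mask=$(( (4294967295 << (32 - $prefix)) & 4294967295 ))
    ip=$(( (n & mask) + 10 ))

    printf '%d.%d.%d.%d\n' \
        $(( (ip >> 24) & 255 )) $(( (ip >> 16) & 255 )) \
        $(( (ip >> 8) & 255 )) $(( ip & 255 ))
)

# serviceSubnet from the ClusterConfiguration in these notes
dns_ip_for 10.96.0.0/12
```

The sed command above writes 10.96.1.10 instead. Whichever address is chosen has to match the ClusterIP of the kube-dns Service (`kubectl get svc -n kube-system kube-dns`), otherwise pods cannot resolve names.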

discovery-file

kubeadm join --discovery-file path/to/file.conf (local file)

kubeadm join --discovery-file https://url/file.conf (remote HTTPS URL)

The discovery file uses the regular Kubernetes kubeconfig format.

etcd

find /tmp/ -name ca.key -type f

/tmp/pki/etcd/ca.key

/tmp/pki/ca.key

flannel

ContainerCreating

edit

1 | kubectl edit cm kube-proxy -n kube-system |

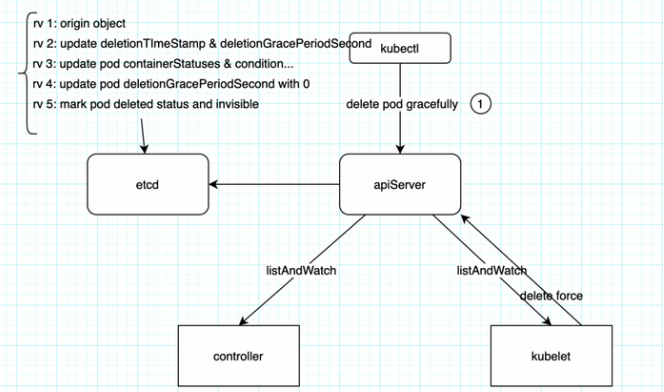

删除pod

- • k8s garbage-collection

- • k8s finalizers

1 | kubectl delete pod xxx -n kube-system --grace-period=0 --force |

1 | $ kubectl proxy #127.0.0.1:8001 |

1 | ❯ kubectl get pod -n mysql-operator |

Uninstall and cleanup

kubeadm reset -f

Certificate expiry

kubeadm join k8s.org:6443 --config /opt/join.yaml

"Failed to get system container stats" err="failed to get cgroup stats for \"/systemd\": failed to get container info for \"/systemd\": unknown container \"/systemd\"" containerName="/systemd"

https://github.com/kubernetes/kubernetes/issues/56850

https://github.com/kubermatic/machine-controller/pull/476

https://github.com/kubernetes/kubernetes/issues/56850#issuecomment-406241077

# kube-dns: view the token

kubectl get secrets -n kube-system

[download-certs] Downloading the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

error execution phase control-plane-prepare/download-certs: error downloading certs: error downloading the secret: secrets "kubeadm-certs" is forbidden: User "system:bootstrap:uxzaiw" cannot get resource "secrets" in API group "" in the namespace "kube-system"

To see the stack trace of this error execute with --v=5 or higher

Shutting down VMs with virsh

for i in $(sudo virsh list --name);do sudo virsh shutdown $i;done