Implementation

https://github.com/kubernetes-sigs/sig-storage-lib-external-provisioner

nfs

Every node in the Kubernetes cluster must have the NFS client installed.

https://github.com/kubernetes-sigs/nfs-subdir-external-provisioner

```bash
helm install -f ./nfs-subdir-external-provisioner/values.yaml ./nfs-subdir-external-provisioner \
```

Either give the release a name explicitly, or add the `--generate-name` flag to have Helm generate a random one.

```bash
kubectl create ns nfs-provisioner
```
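The `values.yaml` passed with `-f` is where the NFS share is configured. A minimal sketch of the overrides, assuming the chart's `nfs.server`, `nfs.path` and `storageClass.name` keys; the server address is a placeholder and the export path is only a guess based on the directory used in the permissions note further down.

```yaml
# Hypothetical overrides for ./nfs-subdir-external-provisioner/values.yaml
nfs:
  server: 192.168.1.100      # placeholder: address of your NFS server
  path: /mnt/ssd/LOCAL_NFS   # placeholder: directory exported by that server
storageClass:
  name: nfs-client           # StorageClass name that PVCs will reference
```

The same values can also be passed as `--set nfs.server=... --set nfs.path=...` on the `helm install` command line.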

With this option set, PVCs are created automatically through the `nfs-client` StorageClass:

`--set global.storageClass=nfs-client`
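For charts that honor `global.storageClass` (Bitnami charts, for example), the effect is that the PVCs they render carry `storageClassName: nfs-client`; the provisioner then creates one subdirectory on the share per claim. A hand-written equivalent, with a hypothetical claim name and size:

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: demo-claim              # hypothetical name
spec:
  storageClassName: nfs-client  # handled by nfs-subdir-external-provisioner
  accessModes:
    - ReadWriteMany             # NFS allows several pods to mount read-write
  resources:
    requests:
      storage: 1Gi              # hypothetical size
```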

Remounting the original volume after a pod is deleted

```yaml
apiVersion: storage.k8s.io/v1
```
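A sketch of such a StorageClass, assuming the `pathPattern` and `onDelete` parameters described in the nfs-subdir-external-provisioner README. The `provisioner` string must match the `PROVISIONER_NAME` your provisioner was deployed with (the value below is an assumption), and `nfs-client-retain` and the `nfs.io/storage-path` annotation key are hypothetical. By default the directory name includes the generated PV name, so a re-created PVC gets a fresh directory; deriving the path from PVC metadata makes a re-created PVC with the same name and annotation land on the same directory, and `onDelete: retain` keeps the data when the old volume is deleted.

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-client-retain   # hypothetical name
# assumption: must equal the PROVISIONER_NAME of your provisioner deployment
provisioner: k8s-sigs.io/nfs-subdir-external-provisioner
reclaimPolicy: Delete       # the PV object is removed; onDelete below keeps the data
parameters:
  # Directory on the share: <value of the nfs.io/storage-path annotation>/<PVC name>
  pathPattern: "${.PVC.annotations.nfs.io/storage-path}/${.PVC.name}"
  # Leave the directory and its contents in place when the volume is deleted
  onDelete: retain
```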

Use `pathPattern`, and supply the path through an annotation on the PVC.

```yaml
persistence
```

PVC (`PersistentVolumeClaim` template):

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  {{- if .Values.server.persistentVolume.annotations }}
  annotations:
{{ toYaml .Values.server.persistentVolume.annotations | indent 4 }}
  {{- end }}
  ....
```
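The template above copies `server.persistentVolume.annotations` straight into the PVC metadata, so the annotation can be set from the chart's values. A sketch, assuming the StorageClass `pathPattern` reads the `nfs.io/storage-path` annotation; `monitoring` is a hypothetical directory name:

```yaml
server:
  persistentVolume:
    annotations:
      # rendered into the PVC's metadata.annotations by the template above
      nfs.io/storage-path: monitoring   # hypothetical directory prefix on the share
```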

StatefulSet (`volumeClaimTemplates`):

```yaml
  volumeClaimTemplates:
    - metadata:
        name: storage
        {{- with .Values.persistence.annotations }}
        annotations:
          {{- toYaml . | nindent 10 }}
        {{- end }}
      spec:
        accessModes:
        .....
```

```yaml
persistence:
```
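Values for the `volumeClaimTemplates` case, as a hypothetical sketch: the annotation key `nfs.io/storage-path` and the prefix `middleware` are assumptions and only matter if the StorageClass `pathPattern` reads that annotation. Every replica's PVC inherits the same annotations, so the pattern should also include `${.PVC.name}` (for example `${.PVC.annotations.nfs.io/storage-path}/${.PVC.name}`); otherwise all replicas would share one directory. With that pattern, a claim named `data-zookeeper-0` would map to `middleware/data-zookeeper-0` on the share.

```yaml
persistence:
  annotations:
    # Applied to every replica's PVC; keep ${.PVC.name} in the pathPattern
    # so that each replica still gets its own directory.
    nfs.io/storage-path: middleware   # hypothetical directory prefix
```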

Container fails on startup with "permission denied" on the mounted directory

```bash
mkdir -p /mnt/ssd/LOCAL_NFS/middleware/data-zookeeper-{0..2}
```
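If ownership has to be fixed from inside the cluster instead, one option is an init container that runs as root and chowns the volume before the main container starts. A pod-level `fsGroup` does not help here, because Kubernetes does not apply `fsGroup` ownership changes to NFS volumes. A sketch: UID/GID `1001` (the non-root user in Bitnami images), the `busybox:1.36` image and the names `perm-demo`/`data-demo` are assumptions. It only works if the export lets root chown (e.g. `no_root_squash`); with root squashing, ownership has to be fixed on the NFS server itself.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: perm-demo                       # hypothetical name
spec:
  initContainers:
    - name: fix-permissions
      image: busybox:1.36
      # hand the volume to the UID/GID the application runs as (assumed 1001)
      command: ["sh", "-c", "chown -R 1001:1001 /data"]
      securityContext:
        runAsUser: 0                    # root is required to chown
      volumeMounts:
        - name: data
          mountPath: /data
  containers:
    - name: app
      image: busybox:1.36
      # print the identity and directory ownership, then keep running
      command: ["sh", "-c", "id && ls -ld /data && sleep 3600"]
      securityContext:
        runAsUser: 1001
        runAsGroup: 1001
      volumeMounts:
        - name: data
          mountPath: /data
  volumes:
    - name: data
      persistentVolumeClaim:
        claimName: data-demo            # hypothetical PVC
```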

csi

https://kubernetes-csi.github.io/docs/external-provisioner.html

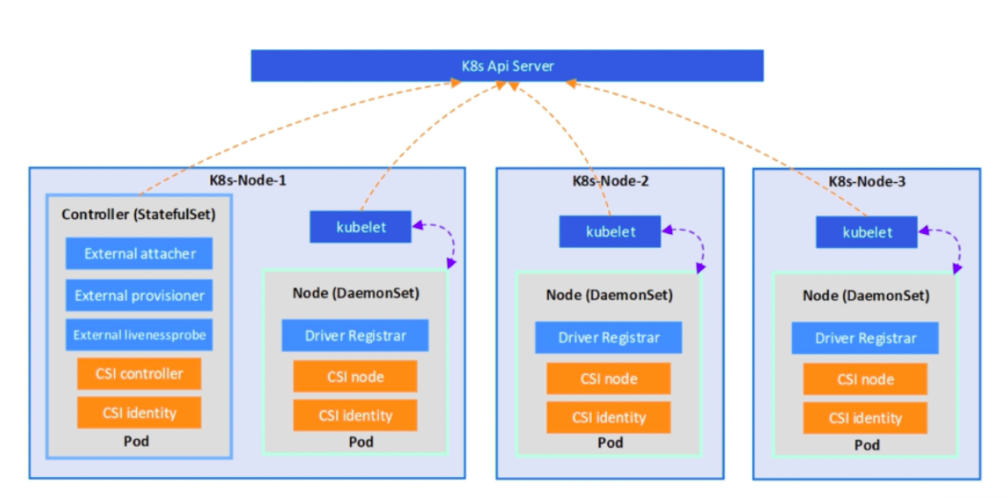

CSI Driver = Node (DaemonSet) + Controller (StatefulSet)

- Orange: Identity, Node and Controller must be implemented by the driver developer; they are called Custom Components.

- Blue: node-driver-registrar, external-attacher and external-provisioner are developed and maintained by the Kubernetes team; they are called External Components and run as sidecars alongside the Custom Components.

| Interaction | Purpose |
|---|---|
| External Provisioner + Controller Server | Create and delete volumes |
| External Attacher + Controller Server | Attach and detach volumes |
| Volume Manager + Volume Plugin + Node Server | Mount and unmount volumes |
| AD Controller + Volume Plugin | Create and delete VolumeAttachment objects |
| External Resizer + Controller Server | Expand volumes |
| External Snapshotter + Controller Server | Take volume snapshots (backups) |
| Driver Registrar + Volume Manager + Node Server | Register the CSI plugin and create the CSINode object |
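The External Attacher and AD Controller rows only apply to drivers that need an attach step. A driver declares this in its `CSIDriver` object; a sketch with the hypothetical driver name `example.csi.driver`:

```yaml
apiVersion: storage.k8s.io/v1
kind: CSIDriver
metadata:
  name: example.csi.driver   # hypothetical; must match the name the driver reports
spec:
  attachRequired: true       # AD controller creates VolumeAttachment objects
  podInfoOnMount: false      # no pod metadata passed on NodePublishVolume
  volumeLifecycleModes:
    - Persistent             # volumes backed by PV/PVC
```

With `attachRequired: false`, Kubernetes skips the attach step, no VolumeAttachment objects are created, and the external-attacher sidecar can be left out.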

The controller can run several replicas for redundancy. It is mainly responsible for provisioning (dynamic create/delete) and attaching (attach/detach) volumes.
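A skeleton of the controller side, to show the sidecar pattern: one pod runs the external-provisioner and external-attacher next to the driver, and they talk over a Unix socket in a shared `emptyDir`. The driver image, its `--endpoint` flag, the service account and the sidecar tags are assumptions (RBAC is omitted); `--leader-election` is enabled so that only one replica acts at a time.

```yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: my-csi-controller                  # hypothetical
  namespace: kube-system
spec:
  serviceName: my-csi-controller
  replicas: 2                              # extra replica for redundancy
  selector:
    matchLabels:
      app: my-csi-controller
  template:
    metadata:
      labels:
        app: my-csi-controller
    spec:
      serviceAccountName: my-csi-controller-sa   # hypothetical; needs sidecar RBAC
      containers:
        - name: csi-provisioner            # calls CreateVolume/DeleteVolume
          image: registry.k8s.io/sig-storage/csi-provisioner:v3.6.0
          args: ["--csi-address=/csi/csi.sock", "--leader-election"]
          volumeMounts:
            - name: socket-dir
              mountPath: /csi
        - name: csi-attacher               # calls ControllerPublish/UnpublishVolume
          image: registry.k8s.io/sig-storage/csi-attacher:v4.4.0
          args: ["--csi-address=/csi/csi.sock", "--leader-election"]
          volumeMounts:
            - name: socket-dir
              mountPath: /csi
        - name: csi-driver                 # Custom Component: Identity + Controller
          image: example.com/my-csi-driver:latest  # hypothetical image
          args: ["--endpoint=unix:///csi/csi.sock"] # hypothetical flag
          volumeMounts:
            - name: socket-dir
              mountPath: /csi
      volumes:
        - name: socket-dir                 # shared socket between sidecars and driver
          emptyDir: {}
```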

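node-driver-registrar handles registration: it runs on every node and registers the CSI driver with that node's kubelet.

CSI Node and CSI Identity are usually served from the same container; together with CSI Controller they carry out the volume mount.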
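A matching skeleton of the node side: a DaemonSet that pairs node-driver-registrar with the driver on every node. The registrar tells the kubelet, through `/var/lib/kubelet/plugins_registry`, where the driver socket lives on the host. The driver name `example.csi.driver`, the image, and the `--endpoint`/`--nodeid` flags are hypothetical.

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: my-csi-node                        # hypothetical
  namespace: kube-system
spec:
  selector:
    matchLabels:
      app: my-csi-node
  template:
    metadata:
      labels:
        app: my-csi-node
    spec:
      containers:
        - name: node-driver-registrar
          image: registry.k8s.io/sig-storage/csi-node-driver-registrar:v2.9.0
          args:
            - --csi-address=/csi/csi.sock
            # socket path as seen from the host, which is what the kubelet needs
            - --kubelet-registration-path=/var/lib/kubelet/plugins/example.csi.driver/csi.sock
          volumeMounts:
            - name: plugin-dir
              mountPath: /csi
            - name: registration-dir
              mountPath: /registration
        - name: csi-driver                 # Custom Component: Identity + Node
          image: example.com/my-csi-driver:latest   # hypothetical image
          args: ["--endpoint=unix:///csi/csi.sock", "--nodeid=$(NODE_ID)"]  # hypothetical flags
          env:
            - name: NODE_ID
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName
          securityContext:
            privileged: true               # needed to mount on the host
          volumeMounts:
            - name: plugin-dir
              mountPath: /csi
            - name: pods-mount-dir         # mounts must propagate back to the kubelet
              mountPath: /var/lib/kubelet/pods
              mountPropagation: Bidirectional
      volumes:
        - name: plugin-dir
          hostPath:
            path: /var/lib/kubelet/plugins/example.csi.driver
            type: DirectoryOrCreate
        - name: registration-dir
          hostPath:
            path: /var/lib/kubelet/plugins_registry
            type: Directory
        - name: pods-mount-dir
          hostPath:
            path: /var/lib/kubelet/pods
            type: Directory
```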

https://blog.csdn.net/2301_76975791/article/details/131098408

s3-csi

https://github.com/ctrox/csi-s3

```yaml
kind: StorageClass
```

`mounter`: the tool used to mount the S3 bucket so it can be used like a file system.
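A sketch of a csi-s3 StorageClass that selects the mounter, plus the Secret it references. The provisioner name `ch.ctrox.csi.s3-driver`, the Secret key names and the MinIO endpoint are taken from my reading of the csi-s3 README or are placeholders, so verify them against the repository; the `csi.storage.k8s.io/*-secret-*` parameters are the standard CSI secret references.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: csi-s3-secret
  namespace: kube-system
stringData:
  accessKeyID: <ACCESS_KEY_ID>                 # placeholder
  secretAccessKey: <SECRET_ACCESS_KEY>         # placeholder
  endpoint: http://minio.minio.svc:9000        # hypothetical MinIO endpoint
  region: ""                                   # assumption: empty for non-AWS endpoints
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: csi-s3
provisioner: ch.ctrox.csi.s3-driver            # assumption: check the driver's name
parameters:
  mounter: rclone                              # README also lists e.g. s3fs and goofys
  csi.storage.k8s.io/provisioner-secret-name: csi-s3-secret
  csi.storage.k8s.io/provisioner-secret-namespace: kube-system
  csi.storage.k8s.io/controller-publish-secret-name: csi-s3-secret
  csi.storage.k8s.io/controller-publish-secret-namespace: kube-system
  csi.storage.k8s.io/node-stage-secret-name: csi-s3-secret
  csi.storage.k8s.io/node-stage-secret-namespace: kube-system
  csi.storage.k8s.io/node-publish-secret-name: csi-s3-secret
  csi.storage.k8s.io/node-publish-secret-namespace: kube-system
```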

rclone-minio

https://rclone.org/s3/#liara-cloud

~/.config/rclone/rclone.conf

```ini
[minio]
```

rclone migration

Prerequisite: both machines must use the same time zone and have synchronized clocks.

```bash
rclone sync minio:bucket minionew:bucket
```

The bucket name must be the same on both sides.